Pseudo Haptics

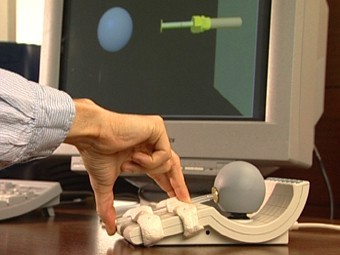

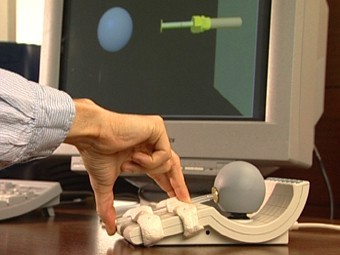

In this project, we used a sensory conflict (an “illusion”) to give virtual objects a sense of physicality. These sensations were generated without any haptic device, solely by manipulating how users see their own limbs relative to virtual objects in a mixed reality environment.

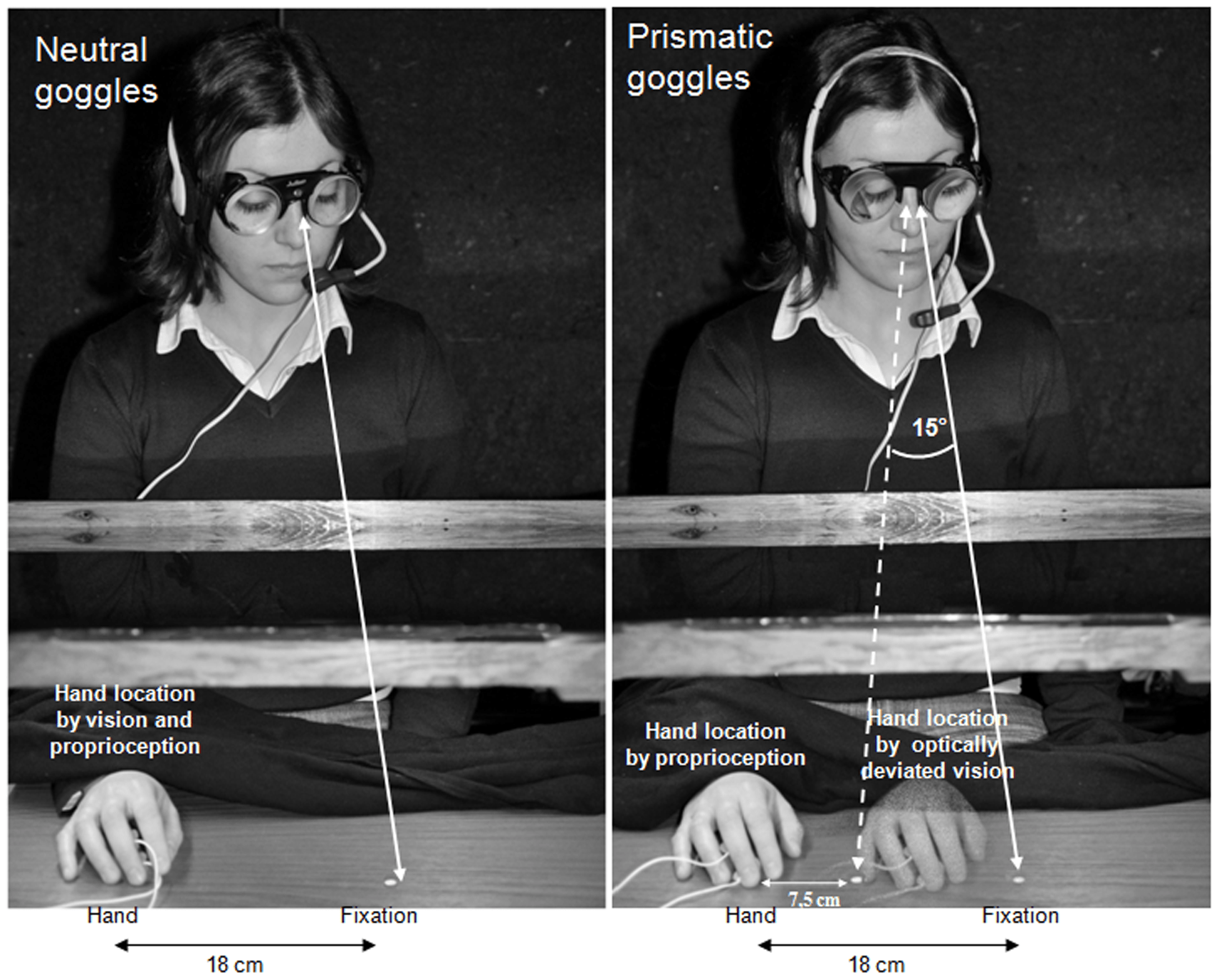

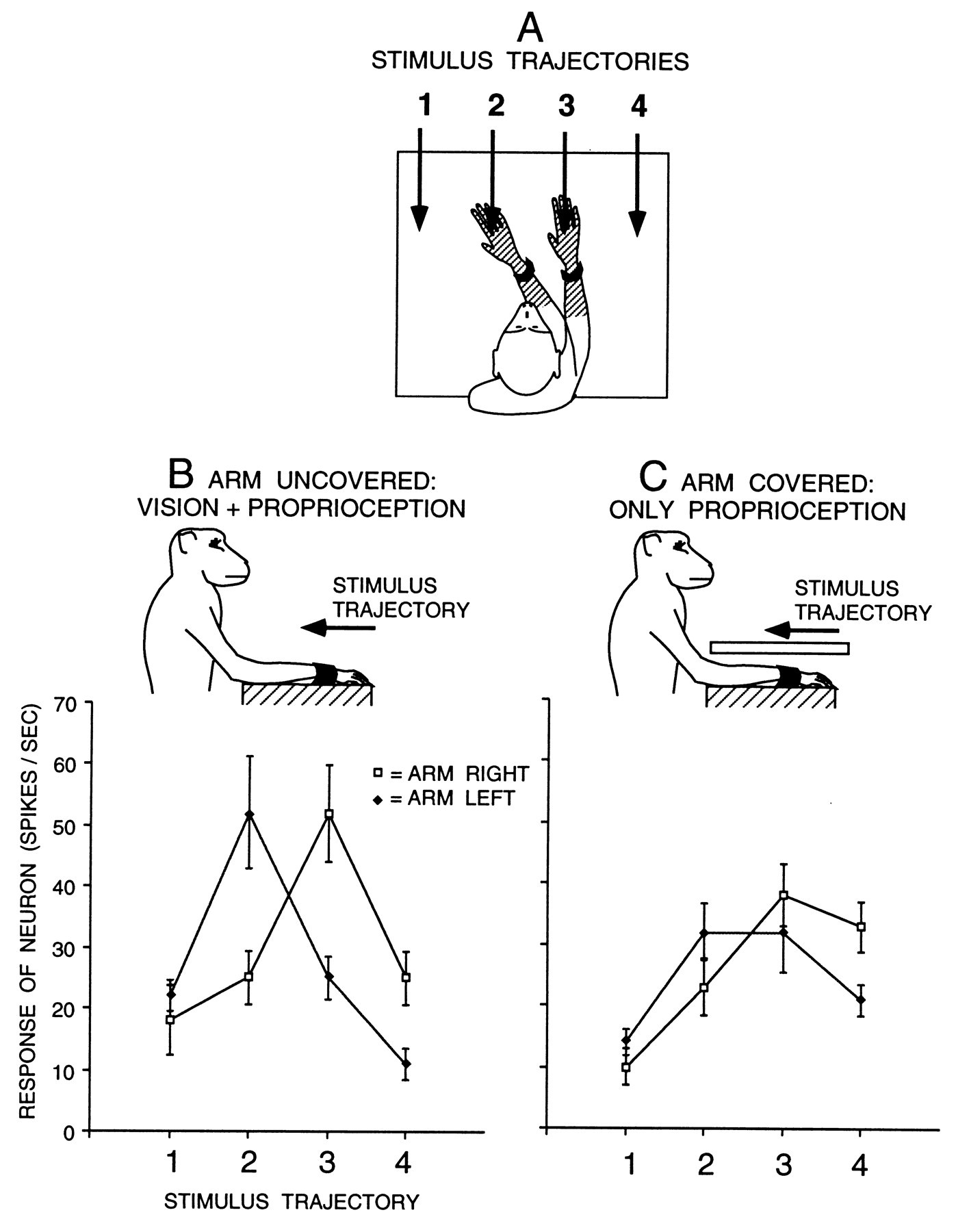

Vision and proprioception (the unconscious perception of movement and spatial orientation) provide us with information about the position and configuration of our limbs. The information coming from these two channels may differ slightly, and is sometimes incoherent. To resolve such sensory conflicts, our cognitive system has to weight each source of information. In some cases, vision dominates proprioception: we trust what we see more than the internal information provided by our joints.
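One common way to picture this weighting is reliability-weighted (inverse-variance) cue combination, where each sense contributes in proportion to how precise it is. The sketch below is only an illustration of that general model, not a model used in this project; the function name `combine_cues` and the numbers in the usage line are hypothetical.

```python
def combine_cues(visual_pos, visual_var, proprio_pos, proprio_var):
    """Fuse two 1-D limb-position estimates, weighting each by its reliability.

    Hypothetical illustration: reliability is taken as 1 / variance, so the
    less noisy cue (often vision) receives the larger weight.
    """
    visual_reliability = 1.0 / visual_var
    proprio_reliability = 1.0 / proprio_var
    w_visual = visual_reliability / (visual_reliability + proprio_reliability)
    w_proprio = 1.0 - w_visual
    fused = w_visual * visual_pos + w_proprio * proprio_pos
    return fused, w_visual


# Made-up example: vision says the hand is at 10 cm, proprioception says 13 cm,
# and vision is four times less variable, so the fused estimate stays close to vision.
fused, w_visual = combine_cues(10.0, 1.0, 13.0, 4.0)
print(round(fused, 2), w_visual)  # → 10.6 0.8
```

Under this model, "vision dominates" simply means the visual weight is close to 1, which is what lets a displaced image of the hand pull the perceived hand position along with it.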

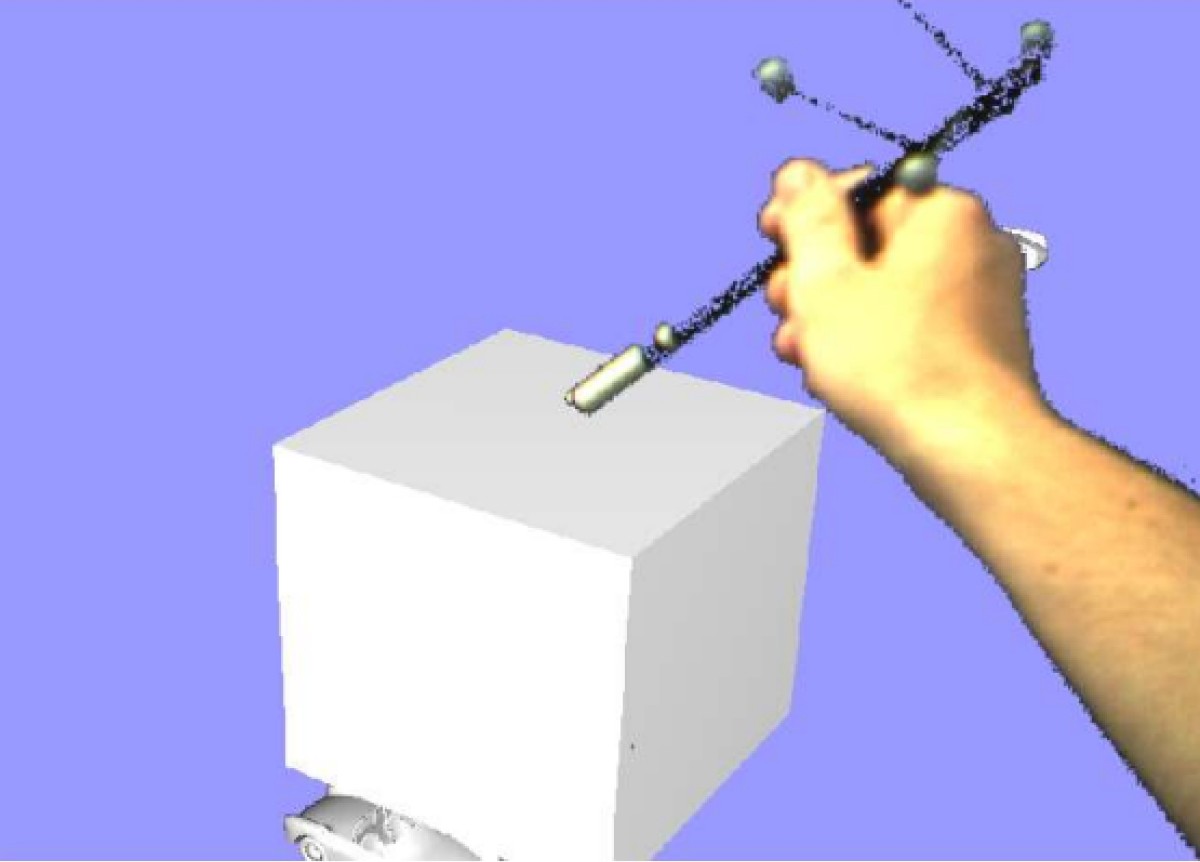

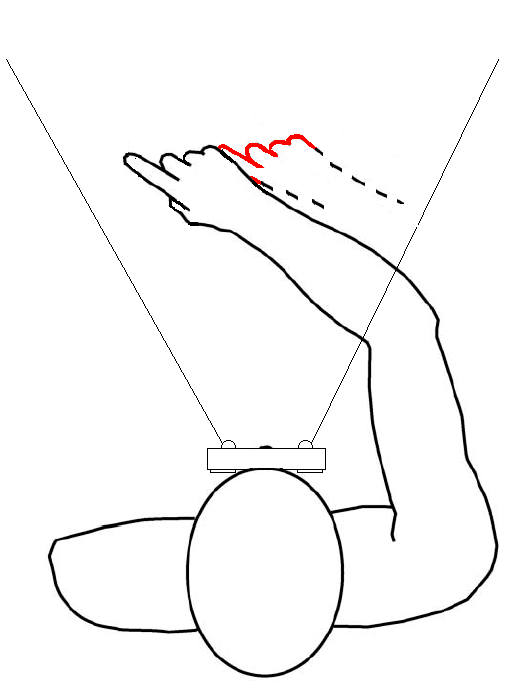

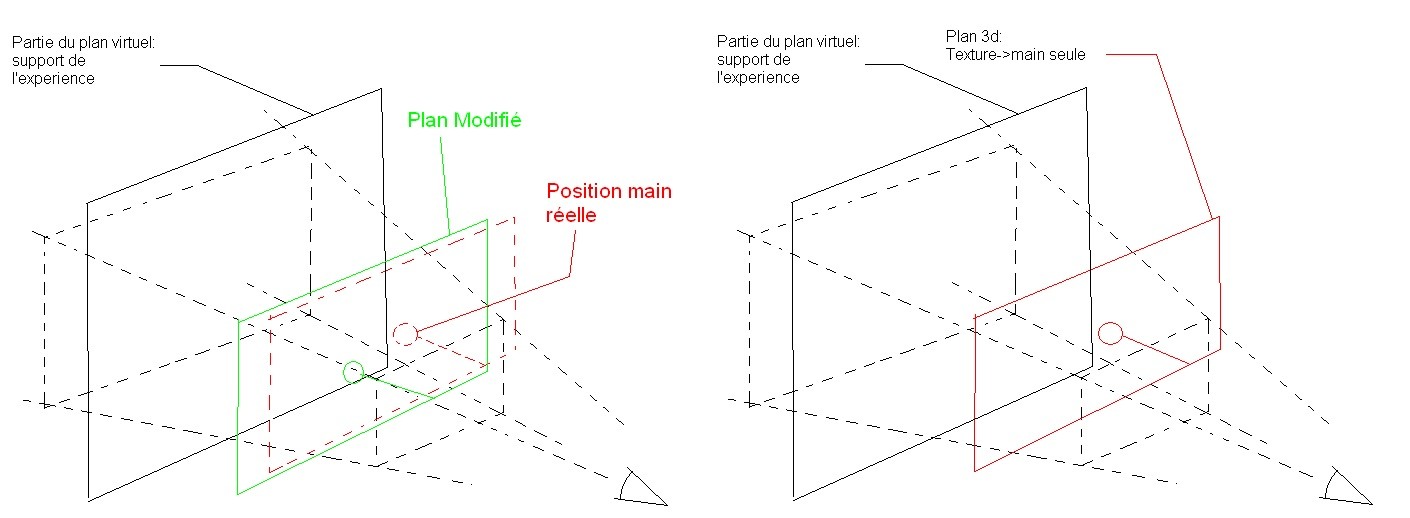

Our team designed a virtual environment to create and control such a conflict by modifying the image users perceive of their own body. The ultimate goal is to provide users with pseudo-haptic feedback in a virtual environment, i.e., to give virtual objects a sense of physicality without any external force-feedback device, solely by modifying users’ perception of their body relative to the virtual object. For example, the user can touch a virtual cube with a stylus. The system displaces the image of the user’s hand so that the stylus never crosses any face of the cube. The image of the limb seen by the user then conflicts with its actual physical position, and this conflict gives the virtual cube a sense of physicality.
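The cube example can be sketched as a small geometric rule: whenever the tracked stylus tip is inside the cube, compute the shortest translation that brings it back onto the nearest face, and apply that same translation to the displayed hand. This is a minimal sketch assuming an axis-aligned cube and a nearest-face rule; the names `AxisAlignedCube` and `visual_offset` are hypothetical and not taken from the original system.

```python
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass
class AxisAlignedCube:
    min_corner: Vec3
    max_corner: Vec3

    def contains(self, p: Vec3) -> bool:
        # Strictly inside: a tip lying exactly on a face is not penetrating.
        return all(lo < c < hi for c, lo, hi in zip(p, self.min_corner, self.max_corner))


def visual_offset(tip: Vec3, cube: AxisAlignedCube) -> Vec3:
    """Translation to add to the rendered hand so the displayed tip stays on the surface.

    Hypothetical nearest-face rule: among the six faces, push the tip out
    through the one that requires the smallest displacement.
    """
    if not cube.contains(tip):
        return (0.0, 0.0, 0.0)  # no contact: show the hand where it really is
    best_axis, best_delta = 0, float("inf")
    for axis in range(3):
        to_lower = cube.min_corner[axis] - tip[axis]  # negative: push toward lower face
        to_upper = cube.max_corner[axis] - tip[axis]  # positive: push toward upper face
        for delta in (to_lower, to_upper):
            if abs(delta) < abs(best_delta):
                best_axis, best_delta = axis, delta
    offset = [0.0, 0.0, 0.0]
    offset[best_axis] = best_delta
    return (offset[0], offset[1], offset[2])


cube = AxisAlignedCube((0.0, 0.0, 0.0), (10.0, 10.0, 10.0))
print(visual_offset((5.0, 9.5, 5.0), cube))  # → (0.0, 0.5, 0.0)
```

Adding the offset to the real tip position, (5, 9.5, 5) + (0, 0.5, 0), puts the displayed tip exactly on the top face while the physical hand has gone 0.5 units further. The nearest-face rule is the simplest choice but can jump to the opposite face once the real tip passes the cube's center; remembering the face through which the stylus entered would avoid that.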

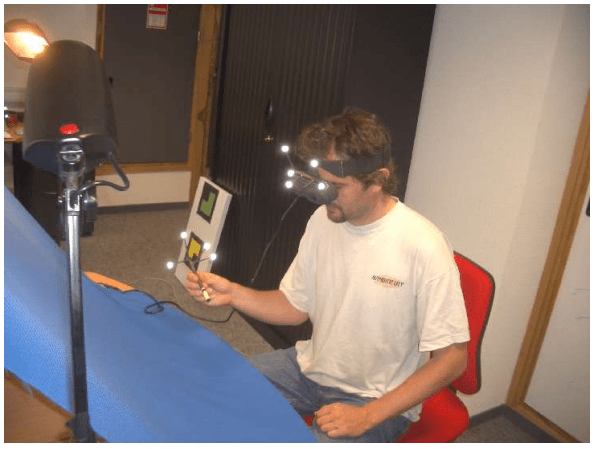

The system we developed lets us integrate and modify elements from the real world in a virtual environment using a video see-through Head-Mounted Display (HMD). The HMD captures the scene from the user’s perspective so that it can be modified before display. Using simple background subtraction, the system lets the user interact with a virtual environment while still seeing their own hands and arms. The stylus is tracked with a Vicon motion-capture camera system. When the stylus comes into contact with a virtual object, the image of the user’s arms is translated virtually so that the stylus never enters the object.
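The video pipeline described above can be approximated in two steps: segment the user's arms by differencing the camera frame against a previously captured static background, then paste only those foreground pixels onto the rendered virtual scene, shifted by an offset. This is a simplified sketch assuming grayscale frames of equal size, a fixed threshold, and a 2-D pixel shift standing in for the 3-D translation projected into the camera image; `foreground_mask` and `composite_shifted` are hypothetical names.

```python
Image = list[list[int]]  # grayscale image, indexed as image[row][column]


def foreground_mask(frame: Image, background: Image, threshold: int) -> list[list[bool]]:
    """Mark pixels that differ enough from the static background (e.g. hands and arms)."""
    return [
        [abs(f - b) > threshold for f, b in zip(frame_row, bg_row)]
        for frame_row, bg_row in zip(frame, background)
    ]


def composite_shifted(frame: Image, mask: list[list[bool]], virtual: Image, dx: int, dy: int) -> Image:
    """Overlay the masked real pixels on the virtual image, translated by (dx, dy) pixels."""
    height, width = len(virtual), len(virtual[0])
    out = [row[:] for row in virtual]  # start from the rendered virtual scene
    for y in range(height):
        for x in range(width):
            if mask[y][x]:
                ty, tx = y + dy, x + dx
                if 0 <= ty < height and 0 <= tx < width:  # drop pixels shifted off-screen
                    out[ty][tx] = frame[y][x]
    return out
```

Because the composite starts from the virtual scene rather than the camera frame, the hand's true location shows virtual content and the hand appears only at its displaced position. With `dx = dy = 0` the user simply sees their real arms inside the virtual environment.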

Contributors

This work was conducted in 2005 with Michael Ortega and Sabine Coquillart within the I3D Team at INRIA Rhône-Alpes, Grenoble, France.

Additional Material

INRIA internship report (Rapport de stage, 2005)

Project overview in French (2005)

References

[1] Early studies on pseudo-haptics by Anatole Lécuyer

[2] Folegatti A, de Vignemont F, Pavani F, Rossetti Y, Farnè A. Losing One’s Hand: Visual-Proprioceptive Conflict Affects Touch Perception. PLoS ONE (2009).

[3] Michael S. A. Graziano. Where is my arm? The relative role of vision and proprioception in the neuronal representation of limb position. Proceedings of the National Academy of Sciences (1999).